by Nortal

Applying GitOps patterns to infrastructure and delivery pipelines using Argo CD

Deployment manifests scattered around the codebase? Hidden configurations? Manually altered, undocumented environment setups? Meet GitOps.

GitOps is versioned CI/CD on top of your declarative infrastructure. Having a clear overview of the desired state in any environment allows for easy error recovery and provides self-documenting deployments. There is no longer any need to SSH into servers and wonder what went wrong. You always have access to the entire history of every change made to your cluster.

Motivation

One of our primary goals was to run an end-to-end test suite in CI on feature branches before they can be merged, while keeping feedback quick, the developer experience sane, and the time spent waiting for the pipeline to pass or fail short. An additional goal was to spawn fresh environments on demand and effortlessly recreate existing environments if needed.

Our application fleet consists of about 20 standalone APIs and a couple of databases. Having various deployment manifests and configuration descriptors scattered across the codebase caused countless problems when trying to develop a deployment-coordinated setup.

While migrating all deployments onto Kubernetes and redesigning our delivery workflows and processes, it became clear that the usual approach was not efficient. Deploying numerous Helm charts and namespaces for environments through scripts directly from CI was not enough: it did not let us oversee the different versions used across the cluster, and it did not provide a proper way of versioning the state of the cluster. To keep all configurations in sync and apply the desired state directly and only from Git, we decided to pick up Argo CD.

Argo CD

Argo CD is a declarative GitOps continuous delivery tool for Kubernetes. It rests on two principles: application definitions, configurations, and environments should be declarative and version controlled, and application deployment and life cycle management should be automated, auditable, and easy to understand.

Argo CD follows the GitOps pattern of using Git repositories as the source of truth for the desired application state, and it automates the deployment of that state to the specified target environments. Application deployments can track updates to branches and tags or be pinned to a specific version of the manifests at a Git commit. Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares the current live state against the desired target state. Modifications made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.

To get started with Argo CD, follow the official Getting Started tutorial.

How does it work?

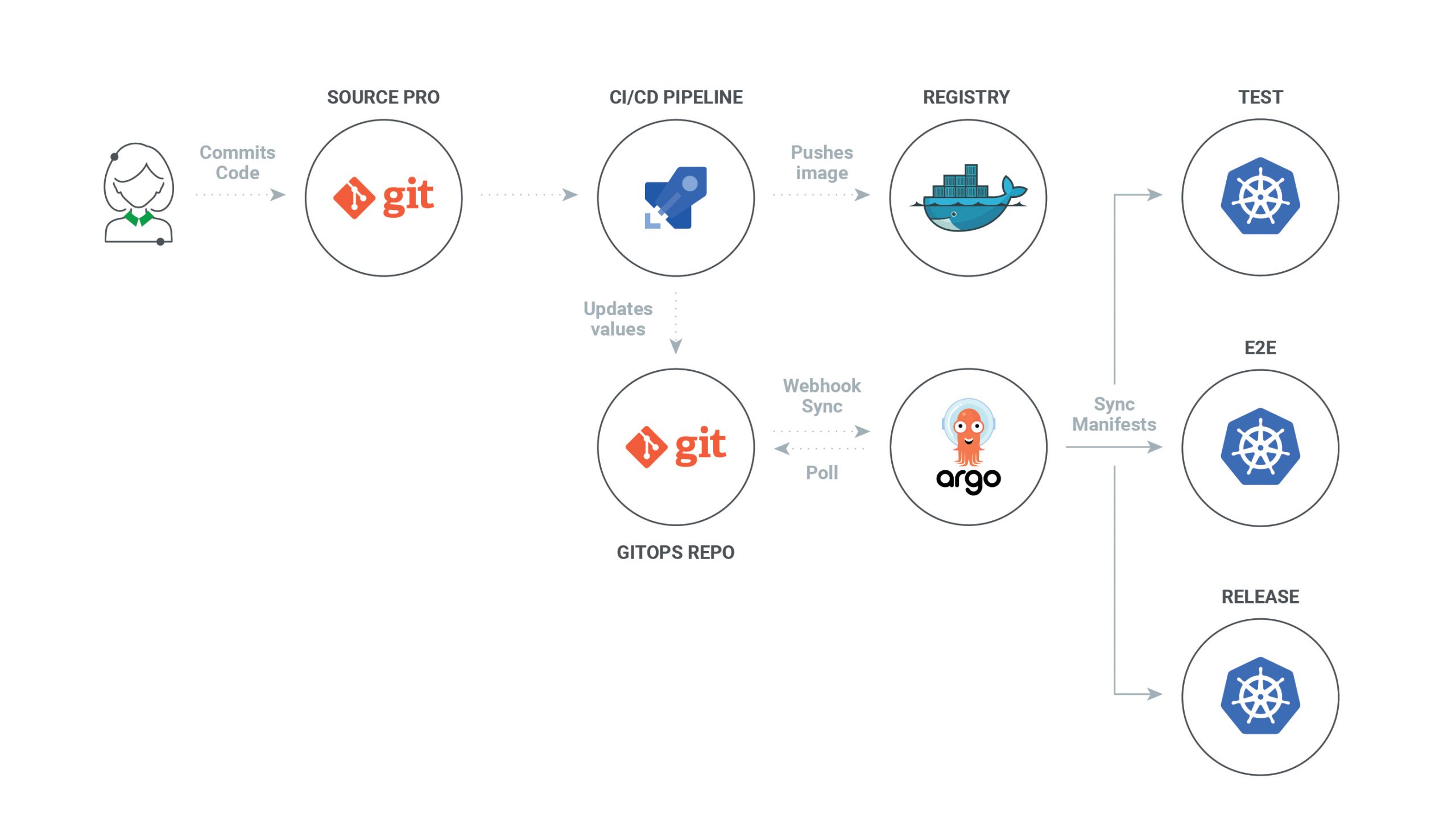

After a successful CI pipeline, new images are pushed to the Docker registry, and the respective image tags are committed into the GitOps repository. A sync is triggered either by a webhook call from CI right after the commit or at the next regular polling interval. Argo CD runs as a controller on the Kubernetes cluster, continuously monitoring running applications and comparing the current live state against the desired target state (as specified in the GitOps repo). The controller detects an OutOfSync application state by diffing the rendered Helm template output against the live state, and it optionally takes corrective action.
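
The compare-and-correct loop described above can be modelled in a few lines. This is only a conceptual sketch, not Argo CD's implementation: the function names and the dictionary representation of manifests are assumptions made for illustration, and resources that exist only in the live state are ignored for simplicity.

```python
def diff_state(desired: dict, live: dict) -> list:
    """Return names of resources whose live state differs from the desired state."""
    return [name for name in sorted(desired) if live.get(name) != desired[name]]


def reconcile(desired: dict, live: dict, auto_sync: bool):
    """Compare both states; with auto_sync, overwrite drifted resources from Git.

    Returns a (status, new_live_state) tuple and never mutates its inputs.
    """
    drifted = diff_state(desired, live)
    if not drifted:
        return "Synced", live
    if not auto_sync:
        # Drift is reported but left alone until someone syncs manually.
        return "OutOfSync", live
    synced = dict(live)
    for name in drifted:
        synced[name] = desired[name]
    return "Synced", synced


# Hypothetical states: Git wants a newer build than the one currently running.
desired = {"finance": {"image": "docker.registry.com/finance-api:build-48669"}}
live = {"finance": {"image": "docker.registry.com/finance-api:build-48000"}}

print(reconcile(desired, live, auto_sync=False)[0])  # OutOfSync
print(reconcile(desired, live, auto_sync=True)[0])   # Synced
```

With automated sync switched on, the drift is corrected as soon as it is detected; without it, the application simply stays marked as OutOfSync.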

The App of Apps pattern

An Argo CD Application is defined by two key pieces of information: source, a reference to the desired state in Git, and destination, a reference to the target cluster and namespace.

You can create an app that creates other apps, which in turn can create other apps. This allows you to declaratively manage a group of applications that can be deployed and configured in concert. Let’s take a look at the components required for this kind of setup.

First is the Application resource from Argo CD. We put all of these definitions in the app-charts directory.

    {{- if .Values.enabled }}
    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: {{ .Release.Namespace }}-{{ .Chart.Name }}
      namespace: argocd
      labels:
        app: api
      finalizers:
        - resources-finalizer.argocd.argoproj.io
    spec:
      syncPolicy:
        automated: {}
      destination:
        namespace: {{ .Release.Namespace }}
        server: https://kubernetes.default.svc
      project: nortal
      source:
        path: charts/api
        helm:
          releaseName: {{ .Chart.Name }}
          parameters:
            - name: fullnameOverride
              value: {{ .Chart.Name }}
            - name: image.repository
              value: {{ .Values.image.repository }}
            - name: image.tag
              value: {{ .Values.image.tag }}
            - name: replicaCount
              value: "{{ .Values.replicaCount | default 1 }}"
            - name: ingress.enabled
              value: "{{ .Values.ingress.enabled }}"
            {{ range $i, $host := .Values.ingress.hosts }}
            - name: ingress.hosts[{{$i}}]
              value: {{ $host }}
            {{ end }}
            - name: ingress.hostname
              value: {{ .Values.global.ingressHostname }}
            - name: serviceMonitor.enabled
              value: "true"
        repoURL: {{ .Values.global.source.repoURL }}
        targetRevision: {{ .Values.global.source.targetRevision }}
    {{- end -}}

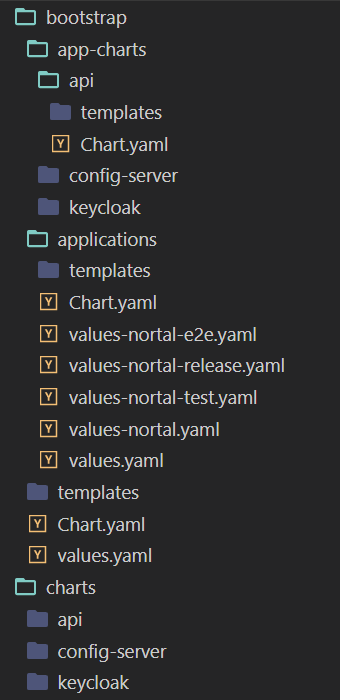

At the core is the applications folder that holds base values for image repositories, common values, and environment-specific overrides. The entire deployment stack is configured through Chart.yaml using Helm’s dependencies. Helm allows you to reuse the same chart for several dependent deployments by giving each one an alias. That way, we can define distinct applications that all deploy with the same underlying chart. The alias name matches the key in the values files that holds the overrides for each application.

    dependencies:
      - name: api
        alias: assignment
        version: 0.1.0
        repository: file://../app-charts/api
      - name: api
        alias: benefit
        version: 0.1.0
        repository: file://../app-charts/api
      - name: api
        alias: dashboard
        version: 0.1.0
        repository: file://../app-charts/api
      - name: api
        alias: file
        version: 0.1.0
        repository: file://../app-charts/api
      - name: api
        alias: finance
        version: 0.1.0
        repository: file://../app-charts/api

Anyone familiar with Helm knows that nothing works without values.yaml. Here we define all default values: the source repository to track for changes and its target revision, the image repositories, and settings such as whether Ingress is enabled and which hosts it exposes.

    global:
      source:
        repoURL: https://gitlab.com/gitopsisawesome/git-ops.git
        targetRevision: HEAD
      ingressHostname: nortal.com
    assignment:
      enabled: true
      image:
        repository: docker.registry.com/assignment-api
      ingress:
        enabled: true
        hosts: [office]
    benefit:
      enabled: true
      image:
        repository: docker.registry.com/benefit-api
      ingress:
        enabled: true
        hosts: [portal]
    dashboard:
      enabled: true
      image:
        repository: docker.registry.com/dashboard-api
      ingress:
        enabled: true
        hosts: [portal]
    file:
      enabled: true
      image:
        repository: docker.registry.com/file-api
      ingress:
        enabled: true
    finance:
      enabled: true
      image:
        repository: docker.registry.com/finance-api
      ingress:
        enabled: true
        hosts: [office]
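
To make the link between aliases and values concrete, here is a rough Python model of which child applications end up being created. It is a sketch under assumptions, not Helm's rendering logic: it assumes that `.Chart.Name` resolves to the alias inside an aliased dependency, the function name is made up, and the disabled `benefit` entry is a hypothetical variation on the defaults above.

```python
def enabled_applications(aliases: list, values: dict, namespace: str) -> list:
    """Mimic the `enabled` guard and the naming scheme of the Application template."""
    apps = []
    for alias in aliases:
        app_values = values.get(alias, {})
        if not app_values.get("enabled"):
            continue  # corresponds to {{- if .Values.enabled }}
        apps.append({
            # corresponds to {{ .Release.Namespace }}-{{ .Chart.Name }}
            "name": f"{namespace}-{alias}",
            "repository": app_values["image"]["repository"],
        })
    return apps


values = {
    "assignment": {"enabled": True,
                   "image": {"repository": "docker.registry.com/assignment-api"}},
    # Hypothetical: benefit switched off for this environment.
    "benefit": {"enabled": False,
                "image": {"repository": "docker.registry.com/benefit-api"}},
}

for app in enabled_applications(["assignment", "benefit"], values, "e2e"):
    print(app["name"], app["repository"])
```

Each alias picks up only the block of values stored under its own key, which is why a single chart can serve every API in the fleet.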

The values-nortal-e2e.yaml file, which contains environment-specific overrides, is always bundled together with the default values. Note: These environment-specific values are only updated through the CI pipeline after a successful run.

    destination:
      namespace: e2e
    global:
      source:
        targetRevision: HEAD
      springCloudProfiles: nortal-e2e
    assignment:
      image:
        tag: build-49191
    benefit:
      image:
        tag: build-49954
    dashboard:
      image:
        tag: build-50117
    file:
      image:
        tag: build-46620
    finance:
      image:
        tag: build-48669
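
Bundling the two files means that the overrides are layered on top of the defaults: nested maps are merged key by key, and a later file wins wherever both define the same key. The sketch below imitates that layering with plain dictionaries. The `merge_values` helper is written for illustration and is not Helm's own code, and only the `finance` entries are reproduced to keep it short.

```python
def merge_values(base: dict, override: dict) -> dict:
    """Recursively merge override into a copy of base; override wins on conflicts."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            # Scalars and lists are replaced wholesale, not combined.
            merged[key] = value
    return merged


defaults = {
    "global": {
        "source": {
            "repoURL": "https://gitlab.com/gitopsisawesome/git-ops.git",
            "targetRevision": "HEAD",
        },
        "ingressHostname": "nortal.com",
    },
    "finance": {
        "enabled": True,
        "image": {"repository": "docker.registry.com/finance-api"},
        "ingress": {"enabled": True, "hosts": ["office"]},
    },
}

e2e_overrides = {
    "destination": {"namespace": "e2e"},
    "finance": {"image": {"tag": "build-48669"}},
}

effective = merge_values(defaults, e2e_overrides)
print(effective["finance"]["image"])
# {'repository': 'docker.registry.com/finance-api', 'tag': 'build-48669'}
```

The repository comes from the defaults and the tag from the environment file, so CI only ever has to touch the small override file when it publishes a new build.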

The parent application is another piece that sits on top of all the other resources mentioned above. This definition receives all of its properties from the respective values files, which are determined by command-line arguments passed to the Helm install command. This template can be used to set up persistent environments but also to deploy new environments on demand. We call the on-demand environments Feature or Review apps. This kind of arrangement allows us to deploy a new isolated environment directly from the feature branch pipeline. With a single click and a single command, we override the image tag with the one built and published specifically from that feature branch.

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: "{{ .Values.destination.namespace }}"
      namespace: argocd
    spec:
      project: nortal
      syncPolicy:
        automated: {}
      destination:
        server: https://kubernetes.default.svc
        namespace: "{{ .Values.destination.namespace }}"
      source:
        path: bootstrap/applications
        repoURL: {{ .Values.global.source.repoURL }}
        targetRevision: {{ .Values.global.source.targetRevision }}
        helm:
          valueFiles:
            - values.yaml
            - values-{{ .Values.destination.cluster }}.yaml
            - values-{{ .Values.destination.cluster }}-{{ .Values.observe.imageSet }}.yaml
          parameters:
            {{ if .Values.appName }}
            - name: {{ .Values.appName }}.image.tag
              value: {{ .Values.appTag }}
            {{ end }}
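
To see what this template resolves to for a given environment, the two dynamic parts can be mimicked in Python. This is an illustrative sketch only: the helper names are invented, and the build number is a hypothetical example.

```python
def value_files(cluster: str, image_set: str) -> list:
    """List the values files in the order the parent template declares them."""
    return [
        "values.yaml",
        f"values-{cluster}.yaml",
        f"values-{cluster}-{image_set}.yaml",
    ]


def tag_parameters(app_name=None, app_tag=None) -> list:
    """Emit the optional image tag override, mirroring {{ if .Values.appName }}."""
    if not app_name:
        return []
    return [{"name": f"{app_name}.image.tag", "value": app_tag}]


print(value_files("nortal", "e2e"))
# ['values.yaml', 'values-nortal.yaml', 'values-nortal-e2e.yaml']
print(tag_parameters("finance", "build-51234"))
# [{'name': 'finance.image.tag', 'value': 'build-51234'}]
print(tag_parameters())
# []
```

A persistent environment leaves `appName` unset and runs entirely on the tags recorded in Git, while a feature environment pins exactly one application to the image built from its branch.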

Finally, there is the charts directory that holds basic Helm chart templates, for example api, the most common chart, which suits the majority of our microservices. These charts contain the usual Kubernetes resources such as Deployments, Services, Ingresses, and ConfigMaps. Deploying a new environment means running a single Helm command:

    helm upgrade -i "${CI_BUILD_REF_SLUG}" bootstrap -n argocd \
      --set observe.imageSet=e2e \
      --set destination.cluster=nortal \
      --set destination.namespace="${CI_BUILD_REF_SLUG}" \
      --set appName=finance \
      --set appTag="build-${CI_PIPELINE_ID}"
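
When this command is assembled by a script rather than typed by hand, it helps to remember that every override needs its own `--set` flag (or has to be part of one comma-separated list); a bare `key=value` is treated as a positional argument. The following Python sketch builds the argument list that way. The function name, the branch slug, and the pipeline ID are hypothetical and stand in for the values GitLab CI would provide.

```python
import shlex


def helm_upgrade_args(branch_slug: str, pipeline_id: int, app_name: str,
                      cluster: str = "nortal", image_set: str = "e2e") -> list:
    """Build the argument list for deploying one feature environment."""
    overrides = {
        "observe.imageSet": image_set,
        "destination.cluster": cluster,
        "destination.namespace": branch_slug,
        "appName": app_name,
        "appTag": f"build-{pipeline_id}",
    }
    args = ["helm", "upgrade", "-i", branch_slug, "bootstrap", "-n", "argocd"]
    for key, value in overrides.items():
        args += ["--set", f"{key}={value}"]  # one flag per override
    return args


# Hypothetical branch slug and pipeline ID.
args = helm_upgrade_args("feature-login", 51234, "finance")
print(shlex.join(args))
```

Passing the list to `subprocess.run(args, check=True)` would execute it without any shell quoting concerns.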

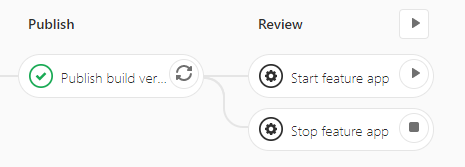

We use this approach in our feature branch pipelines, where we leverage GitLab's review app capabilities. Example feature branch pipeline stage:

Our GitOps repository appears like this by combining everything described above:

Takeaways

Not many tools get the job done as well as Argo CD. It integrates seamlessly with Kubernetes and Helm and allows you to jump into the GitOps mindset immediately. Other than, perhaps, having to write a lot of YAML, I cannot find any negatives to adopting this workflow. Our team has not recently had a reason to use the kubectl command line to track down deployment issues or make changes to the setup. Manually altering manifests has no lasting effect anyway, since the single source of truth is always in Git.
