by Nortal

Containers: The software development lifecycle’s last mile

The software delivery industry has developed into a highly pragmatic practice forged from relentless questioning and refactoring of our collective delivery processes. Over the last decade, the industry has moved from software builds on specific, blessed individual developer machines to an automated and sophisticated build pipeline that allows developers to increase feedback at all stages of the software development lifecycle.

As the build pipeline has evolved, so have our methods for delivery. We have progressed significantly from bare-metal, single-installation monolithic applications by introducing an abstraction of the physical hardware. Virtualization provided the first such abstraction, and containers add a further layer, decoupling lightweight applications from the servers that host them.

This article will show how containerization has provided developers with the last abstraction required to decouple a development team from the traditional Software Development Lifecycle (SDLC) established over a decade ago.

The software development renaissance – uncovering an efficient path

The software development lifecycle (SDLC) has been evolving for over five decades, but it was adopted and refined by a wide audience beginning in the early 1980s. We can call this the SDLC renaissance. Let’s start our journey there.

In the 80s, software development had moved off of mainframes and onto individual PCs, requiring individuals to share source code between machines both locally and remotely. Concurrent Versions System, or CVS, was introduced in 1986 and was widely adopted by the late 80s and early 90s. Many problems surfaced with the first implementation of remote source control. Chief among them was the difficulty in adhering to a process for building software. At the time, there were several SDLC models, some of which had existed since the 1940s. These models were unable to meet the growing needs of large groups of distributed contributors. On top of that, many of the contributors began as hobbyists and did not have the same fundamental knowledge as the previous, highly educated generation. This resulted in shortcuts that often led to inefficient and damaging practices, such as binary code being sent by FTP, email, network file system folders or physical media via “sneaker net.” To make matters worse, most builds were conducted on an individual developer’s machine, adding yet another variable to the build process. Together, these factors greatly reduced the repeatability of builds should the team need to reconstruct or investigate a previous version of the application.

Once a build produced an artifact, the software then faced the challenges of installation and deployment. Services typically required long downtimes combined with very complicated, highly manual procedures to deploy new versions of the codebase. As a result, the time-to-market increased and companies made use of this “extra” time to pack more features (and thus more risk) into each new version. Additionally, companies increased their dependence on their IT teams, many of which had little or no software development skills. This created a knowledge gap and frequent, contentious meetings between developers and IT.

The deployed solutions relied heavily on the embedded dependencies and libraries of the host operating system, coupling each application tightly to that operating system. Operating systems provided a few technologies to aid the IT teams with deployment, but the automation tools were vastly inadequate by today’s standards.

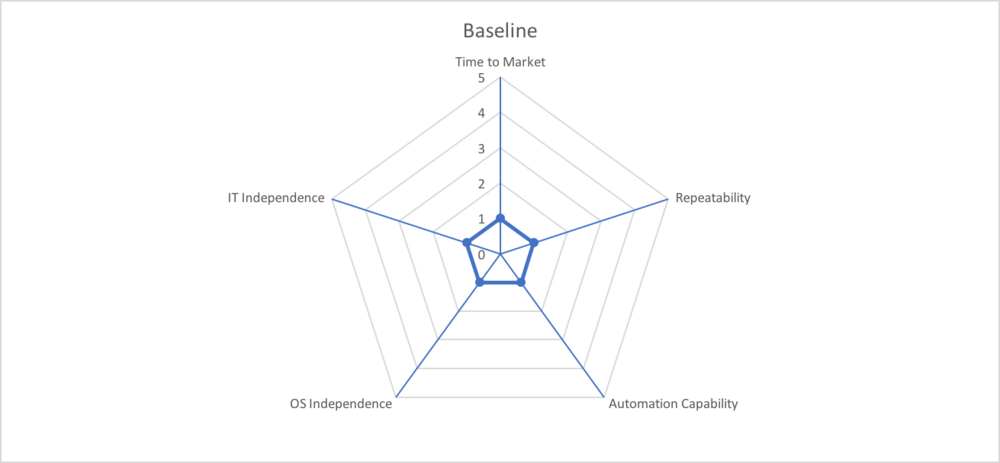

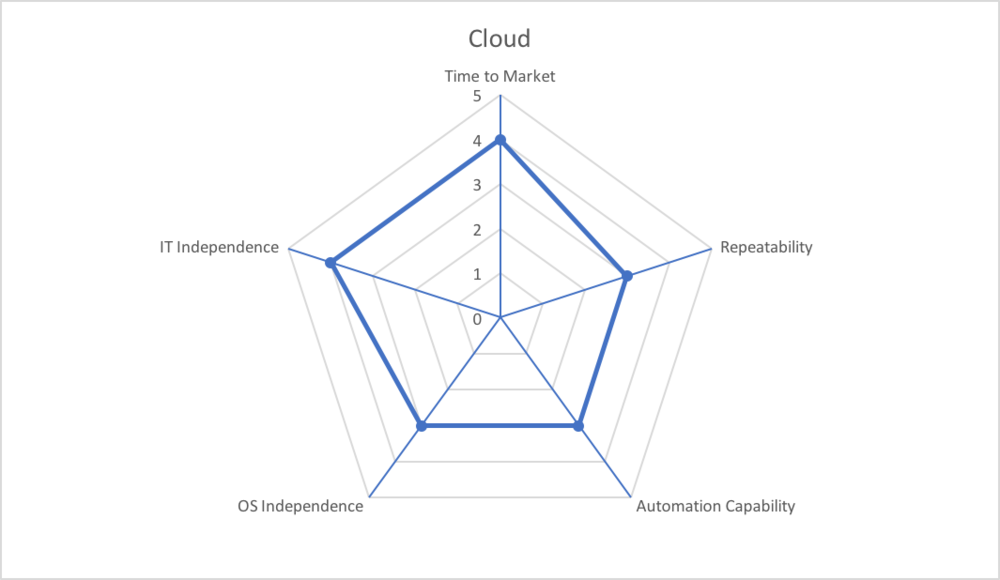

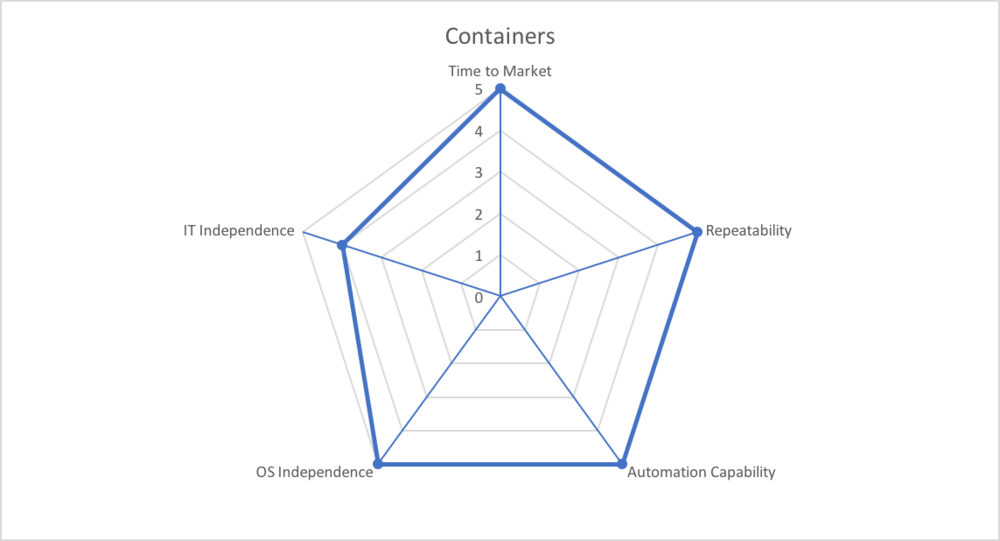

To track the progress of the SDLC, we will score each major milestone on five main variables. A one (1) indicates that the capability is very low or almost absent, while a five (5) indicates high capability.

| Variable | Description |
| --- | --- |
| Time to market | How quickly a single change can be introduced and deployed to a production environment. |
| Repeatability | The ability for a build to be repeated and result in the same binary. Repeatability also covers the ability to deploy the same binary artifact without any modification. |
| Automation capability | How much automation is possible and how useful that automation would be. It also reflects how manual a process remains. |
| OS independence | The degree to which the end application is decoupled from its environment and/or operating system (OS). Greater OS independence allows applications to run in a wider range of server and delivery locations. |
| IT independence | The degree to which the delivery of software is decoupled from the Information Technology (IT) team(s). |
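As a rough illustration of the scoring model, the five variables can be held in a small record that enforces the 1-to-5 scale and reports which variables improved between two milestones. This is only a sketch: the class name `MilestoneScore`, the method `improved_over` and the sample values are assumptions made for this example and are not the scores shown in the article's figures.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass(frozen=True)
class MilestoneScore:
    """Scores for one SDLC milestone on the 1-to-5 scale (hypothetical helper)."""

    time_to_market: int
    repeatability: int
    automation_capability: int
    os_independence: int
    it_independence: int

    def __post_init__(self):
        # Reject anything outside 1 (very low) to 5 (high capability).
        for f in fields(self):
            value = getattr(self, f.name)
            if not 1 <= value <= 5:
                raise ValueError(f"{f.name} must be between 1 and 5, got {value}")

    def improved_over(self, earlier: MilestoneScore) -> list[str]:
        """Names of the variables that score higher than in an earlier milestone."""
        return [
            f.name
            for f in fields(self)
            if getattr(self, f.name) > getattr(earlier, f.name)
        ]


# Placeholder values for illustration only; they are not the article's scores.
before = MilestoneScore(1, 2, 1, 1, 1)
after = MilestoneScore(2, 2, 1, 3, 1)
print(after.improved_over(before))
```

Comparing two records this way makes it easy to see which variables a given milestone moved and which it left untouched.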

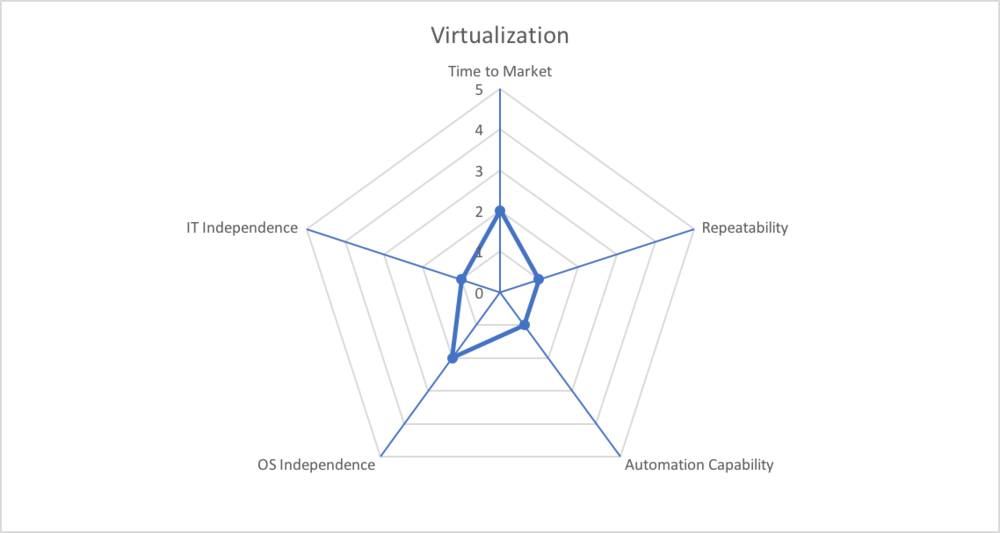

Virtualization: The first mile

Provisioning new environments would often take months and require the developers to have intimate knowledge of hypothetical server capabilities. Furthermore, IT personnel were required to understand the basics of running the application to ensure the correct servers were ordered to achieve the desired scale, business objectives and cost efficiency.

The first major change to the deployment process happened when virtualization became widely adopted. Two key features provided by virtualization improved the delivery pipeline. The first was the abstraction between physical hardware and the servers that were created virtually on top of the hypervisor. This abstraction allowed teams to create servers with the operating system most appropriate for their work. This helped decouple the software and the operating system, reducing the OS dependency.

The second major benefit was the decreased time-to-market. Development teams could request provisioned servers much more rapidly. Decreasing the time required to provision new environments allowed developers to try new processes of development without tying up precious resources.

While virtualization provided more OS independence and faster time-to-market, significant human intervention was still required for provisioning infrastructure and deploying new changes. Additionally, virtualization required a specialized skill set to configure, manage, and maintain the virtualized software, resulting in an even wider knowledge gap between development and IT. This was only made worse by the high cost of training personnel on how to operate the tools needed to virtualize the software. Though it added efficiency, virtualization did not bring any significant improvements to software delivery processes.

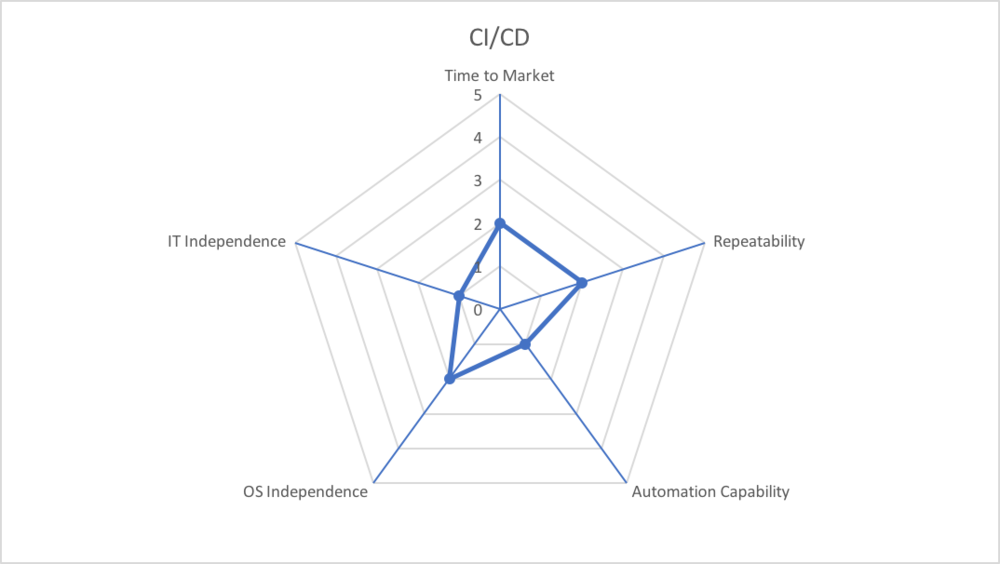

Building the development highway with continuous delivery

With the increased efficiency provided by virtualization, additional servers could be requisitioned to help build software. The most important tool developed to accomplish this came from the Continuous Delivery (CD) movement, largely born out of the Agile SDLC process.

Continuous Delivery uses dedicated build servers to compile, test and package the project. This technique provides many benefits, but the two most important to software delivery were the introduction of standardized builds and immutable binary artifacts. Standardized builds removed the developer’s “blessed” machine from the build cycle and allowed all builds to be done consistently on a machine that was not used for daily development. This practice increased the security and repeatability of all builds while providing tangential benefits like transparency, fast feedback through automated testing suites and accountability for broken builds.

The second major benefit gained when implementing CD is the production of versioned, immutable binary artifacts. Artifacts were no longer built on a production server or reconstituted in an environment through ad hoc rsync or file copy scripts. Binaries were placed on a separate server that managed dependencies, allowing the project to pull in versioned artifacts as dependencies when necessary.
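To make the idea of versioned, immutable artifacts concrete, here is a minimal sketch of a repository that refuses to overwrite a version once it has been published. The `ArtifactRepository` class is a hypothetical in-memory stand-in written for this example; it does not model any particular repository product.

```python
import hashlib


class ArtifactRepository:
    """In-memory stand-in for a binary repository (hypothetical, for illustration)."""

    def __init__(self):
        self._store = {}  # maps (name, version) to the artifact bytes

    def publish(self, name: str, version: str, content: bytes) -> str:
        key = (name, version)
        # Immutability: a published version can never be overwritten.
        if key in self._store:
            raise ValueError(f"{name} {version} is already published")
        self._store[key] = content
        # Return a digest so consumers can verify what they later fetch.
        return hashlib.sha256(content).hexdigest()

    def fetch(self, name: str, version: str) -> bytes:
        return self._store[(name, version)]


repo = ArtifactRepository()
digest = repo.publish("shop.war", "1.4.0", b"compiled bytes")

try:
    repo.publish("shop.war", "1.4.0", b"different bytes")
except ValueError as error:
    print(error)
```

Because a version can only be published once, every environment that fetches `shop.war` at `1.4.0` receives exactly the bytes the pipeline built and tested.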

The introduction of CD practices was revolutionary for the SDLC, but there were still several key areas that required attention. While the binary repository offered on-demand retrieval of binary artifacts, the deployment process was still highly dependent on IT. In a Java-based web application, the CD pipeline would generate and upload a versioned WAR file to the artifact repository, but the deployment would require that the artifacts be pulled down manually or with a single-purpose script requiring manual execution. Additionally, deployments were still being pushed to largely static environments, despite the use of virtualization.

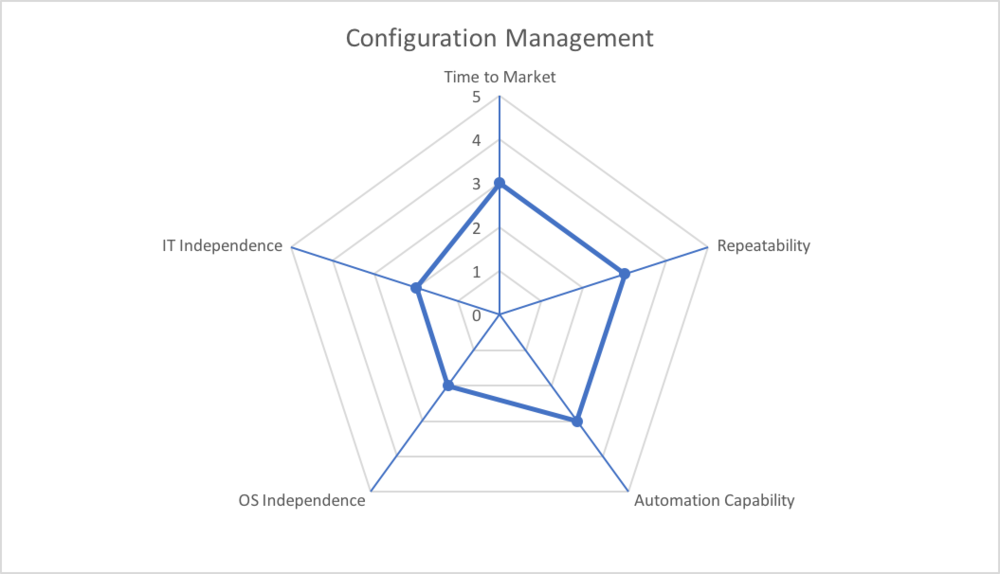

A break in the clouds

Continuous Delivery provided a model to automate builds, increase quality and improve repeatability, but it was initially used only for software development. The next major evolution in the maturity of the delivery pipeline came with Configuration Management (CM) tools. These tools let teams write software that automates the creation and management of infrastructure. Utilizing enhancements in virtualization tools, CM tools allowed developers and IT teams to start working together to engineer repeatable, automated infrastructure. The concept of using code to create and manage infrastructure is known as Infrastructure as Code, or IaC. It was initially designed to manage server resources and their associated configurations, but was often extended to include an automated deployment process. These tools, combined with deployment automation, created an abstraction between the deployment process and the servers it ran on. By simply changing a variable and running an engineered script, an IT team member could deploy a particular version of an artifact into a variable-controlled environment. These automation and process changes helped decrease time-to-market while providing better repeatability and reliability. The dependence on IT staff was reduced due to API capabilities introduced in the second generation of CM tools. Now developers could utilize automation tools to replicate clustered environments on their local machines or rapidly provision entirely new environments.
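The "change a variable and run the script" idea might look something like the sketch below, where the target environment and artifact version are the only inputs that vary between runs. The inventory, host names and the `plan_deployment` function are all hypothetical, and a real CM tool would express this in its own format rather than in plain Python.

```python
from dataclasses import dataclass

# Hypothetical inventory; a real CM tool would hold this in its own format.
ENVIRONMENTS = {
    "staging": ["stg-app-1"],
    "production": ["prod-app-1", "prod-app-2"],
}


@dataclass(frozen=True)
class DeployStep:
    host: str
    artifact: str


def plan_deployment(environment, name, version):
    """Return one step per host for the chosen environment and artifact version."""
    if environment not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {environment}")
    artifact = f"{name}-{version}.war"
    return [DeployStep(host, artifact) for host in ENVIRONMENTS[environment]]


# Only the variables change between runs; the script itself stays the same.
for step in plan_deployment("production", "shop", "1.4.0"):
    print(f"deploy {step.artifact} to {step.host}")
```

Keeping the environment and version as parameters means the same reviewed script serves every environment, which is where the gain in repeatability comes from.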

While the new tools and more sophisticated automation dramatically reduced manual intervention and decoupled build pipelines, the artifacts were still being deployed to static environments simply because of the cost limitations of expanding data centers. Those static environments still contained dependencies that were not always captured by CM tools and thus they remained “snowflake” servers. IT and development teams were closer together than ever, but their responsibilities were still separated.

The possibility of the cloud

Public cloud technology began to rise in popularity as companies started to realize that their data centers were large cost centers that were not flexible enough to allow developers the needed capacity when working on large or complex solutions.

Public cloud offerings have been around for over a decade and have gained wider adoption in the last three to five years. Public cloud services combine automation, configuration management, virtualization, provisioning, reduced time-to-market and all of the benefits that those features bring. Additionally, utilizing a public cloud closes the gap between the delivery team and IT by bringing the two teams together, allowing for better organizational alignment.

Using a public cloud as the delivery end of a software build pipeline leaves one last coupling. With the exception of the Netflix Spinnaker tool (which replaced Asgard), applications are still being deployed using a process of combining newly created environments with versioned binary artifacts. This process is not easily portable and requires a great deal of effort should the underlying machines change.

New horizons

Creating new infrastructure every time a deployment is done increases the time-to-market of software. The development process needs an abstraction between the machine and the artifacts. Containers serve as a perfect abstraction, allowing a build pipeline to construct a mini-server as the binary artifact and streamlining the deployment process. Container technology providers have worked on establishing standards through the Open Container Initiative (OCI). This community initiative allows versioned container artifacts to be highly portable across multiple cloud or container services. Out of the container-based development pattern, a new architectural pattern emerged where applications inside containers carry minimal configuration and reach out to external configuration servers during bootup. Configuration servers allow the container artifacts to act more like business compute machines than static servers, providing higher degrees of portability and flexibility. With a simple change of an environment variable, a container can run as a production or lower-level environment service. External configuration allows development pipelines to continue to test the same immutable artifact through a progression of environments, which follows one of the core principles defined in Continuous Delivery.
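The external configuration pattern can be sketched roughly as follows: the same code runs in every environment, and a single environment variable decides which settings it pulls in at boot. The variable name `APP_ENV`, the settings shown and the in-memory `CONFIG_SERVER` dictionary are assumptions for this example; a real configuration server would be reached over the network.

```python
import os

# Stand-in for an external configuration server, keyed by environment name.
# A real service would be reached over the network; these values are hypothetical.
CONFIG_SERVER = {
    "dev": {"db_url": "postgres://dev-db/app", "log_level": "DEBUG"},
    "production": {"db_url": "postgres://prod-db/app", "log_level": "WARNING"},
}


def fetch_config(environment):
    """Look up the settings for one environment on the stand-in config server."""
    if environment not in CONFIG_SERVER:
        raise LookupError(f"no configuration for environment: {environment}")
    return dict(CONFIG_SERVER[environment])


def boot(environ=os.environ):
    """Start the application using only the environment variable to pick a config."""
    # The artifact carries no environment-specific settings of its own.
    env_name = environ.get("APP_ENV", "dev")
    return {"environment": env_name, **fetch_config(env_name)}


# The same immutable artifact behaves as a dev or production service.
print(boot({"APP_ENV": "dev"})["log_level"])
print(boot({"APP_ENV": "production"})["log_level"])
```

Nothing in the artifact changes between the two calls, which is what lets a pipeline promote one tested image through each environment.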

Supporting the container delivery pipeline architecture continues to push the definition of application and software delivery. Container-based solutions that utilize external configuration allow each container-based artifact to effectively become a business logic compute engine. Container artifacts reach out and pull in configuration upon startup, allowing the application to boot up in a logical environment. This further abstracts the concept of an environment and, at the same time, adds greater support for the core continuous delivery concepts of immutability.

Is this the end of the journey?

Heraclitus once said, “the only constant is change.” This quote is a great way to describe the industry’s pragmatic approach to generating higher quality, more transparent and predictable software. After the explosion of new developers in the 80s and 90s, the industry has improved software delivery through a series of iterations. Virtualization provided an abstraction from physical machines, allowing faster time-to-market. Continuous Delivery added a consistent and predictable model for delivering high-quality, versioned artifacts. Configuration management added a degree of repeatable automation via code, for both the infrastructure and software. Public clouds provide an environment fostering innovative software build tools that take advantage of an almost inexhaustible set of resources. Containers provide the last major innovation, decoupling build artifacts from their accompanying servers.

Is this the end of innovation? If history is any indication, we will continue to see advances in software delivery methodology. Perhaps the next journey will come from AI-based software revolutionizing software development as we know it.

Starting the journey?

Our seasoned team members have been down the development path many times. Many of our developers were part of the software renaissance, and we continue as a team to seek out the next frontier in development. We are committed to helping our clients clear the path to develop the best software possible: software that will stand the test of time and maximize business operations while meeting organizational needs. Contact us for help on your journey.